Day 5: Convolutional Neural Networks Tutorial

Today we will talk about one of the most important deep learning architectures, the "master algorithm" in computer vision. That is what François Chollet, author of Keras, calls convolutional neural networks (CNNs). A convolutional network is an architecture that, like other artificial neural networks, has the neuron as its core building block. It is also differentiable, so the network is conveniently trained via backpropagation. The distinctive feature of CNNs, however, is their connection topology, which results in sparsely connected convolutional layers whose neurons share their weights.
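To make sparse connectivity and weight sharing concrete before we dive in, here is a minimal one-dimensional sketch. It is only an illustration, not the implementation we build later in this post: the names `conv1d` and `windows` are hypothetical, the signal is 1D rather than an image, and the "convolution" is computed without flipping the kernel, which is the usual convention in deep learning.

```haskell
import Data.List (tails)

-- | All contiguous windows of length n (hypothetical helper).
windows :: Int -> [a] -> [[a]]
windows n xs = takeWhile ((== n) . length) (map (take n) (tails xs))

-- | "Valid" 1D convolution without kernel flipping.
-- Every output neuron sees only a small window of the input
-- (sparse connectivity) and reuses the same kernel (weight sharing).
conv1d :: [Double] -> [Double] -> [Double]
conv1d kernel signal =
  [ sum (zipWith (*) kernel window)
  | window <- windows (length kernel) signal
  ]

main :: IO ()
main = do
  let kernel  = [1, 0, -1]               -- three shared weights
      signal  = [0, 0, 1, 2, 1, 0, 0, 0]
      shifted = 0 : init signal          -- same pattern, moved one step right
  print (conv1d kernel signal)
  print (conv1d kernel shifted)
```

In this sketch, eight inputs are mapped to six outputs with only three weights, whereas a fully-connected layer of the same shape would need 8 × 6 = 48. Shifting the input pattern by one position shifts the response by one position as well, which hints at why this topology suits images.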

First, we are going to build an intuition behind CNNs. Then, we will take a close look at a classic CNN architecture. After discussing the differences between convolutional layer types, we are going to implement a convolutional network in Haskell. We will see that on handwritten digits our CNN achieves half the test error of the fully-connected architecture. We will build on what we have learned during the previous days, so do not hesitate to refresh your memory first.

Previous posts

- Day 1: Learning Neural Networks The Hard Way
- Day 2: What Do Hidden Layers Do?
- Day 3: Haskell Guide To Neural Networks
- Day 4: The Importance Of Batch Normalization

Convolution operator

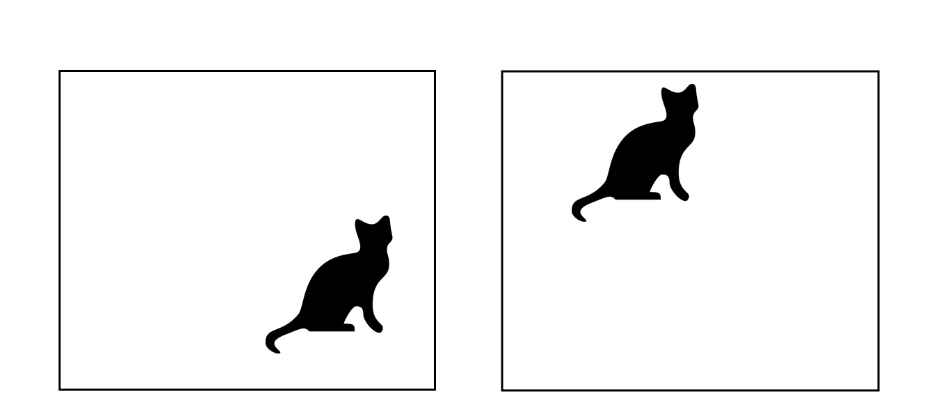

Previously, we have learned about fully-connected neural networks. Although in theory those can approximate any reasonable function, they have certain limitations. One of the challenges is achieving translation symmetry. To explain this, let us take a look at the two cat pictures below.